Ever feel like you're wrestling with ChatGPT to get the information you need, while your friend just types in a question and BAM! Perfect answer?

It’s all about knowing how to ask the right questions. That’s the magic of prompt engineering.

Let’s uncover the secrets behind crafting effective prompts for seamless interactions with LLMs.

What are LLMs?

LLM stands for Large Language Model. Think of an LLM as a large computer program, trained on vast amounts of text, that understands human language and generates responses that mimic human conversation.

These models can perform various tasks, including:

- Language Translation

- Text Classification

- Answering Questions

- Summarization

What are Prompts?

Prompts are the instructions you give an LLM to guide it toward a specific goal.

A prompt is like a GPS for a driver trying to reach a destination in an unfamiliar region.

The quality of your results depends on how well your prompt is crafted.

You can enhance the quality of a prompt by adding context, examples, and specific instructions.

Components of Prompts

A well-crafted prompt typically includes these components:

- Instruction: A clear task or direction for the model to follow.

- Context: External details or background information that guide the model to provide better responses.

- Input Data: The question or input you want the model to generate a response for.

- Output Indicator: The desired type or format of the output.
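To make the four components concrete, here is a minimal sketch that assembles them into a single prompt string. The `Prompt` class, its field names, and the "Label: text" layout are hypothetical choices for illustration, not a standard format; any layout that keeps the components clearly separated should work.

```python
from dataclasses import dataclass


@dataclass
class Prompt:
    """Hypothetical container for the four prompt components."""
    instruction: str
    context: str = ""
    input_data: str = ""
    output_indicator: str = ""

    def render(self) -> str:
        # Emit each non-empty component on its own labeled line, in a fixed order.
        parts = [
            ("Instruction", self.instruction),
            ("Context", self.context),
            ("Input", self.input_data),
            ("Output format", self.output_indicator),
        ]
        return "\n".join(f"{label}: {text}" for label, text in parts if text)


prompt = Prompt(
    instruction="Classify the sentiment of the review.",
    context="Reviews come from an online electronics store.",
    input_data="The battery died after two days.",
    output_indicator="Answer with one word: positive, negative, or neutral.",
)
print(prompt.render())
```

Only the instruction is required here; empty components are simply skipped, so a bare `Prompt("Summarize.")` renders as a one-line prompt.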

Crafting a Quality Prompt

Creating a prompt is an iterative process. You start with a basic prompt, then experiment by tweaking it until you get the desired results.

When tweaking the prompt, try different keywords, data, and context.

Example: Understanding Prompts

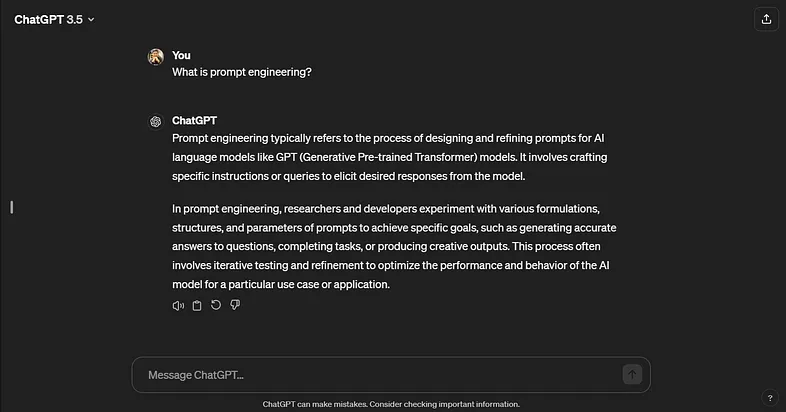

Imagine I’m eager to learn about prompt engineering, so I turn to ChatGPT for guidance.

- Initial Prompt: What is prompt engineering?

As a beginner, I may find the resulting answer hard to follow, so I adjust the prompt accordingly.

- Revised Prompt: I am a beginner to language models. Could you explain prompt engineering to me in the simplest way possible, using examples?

Here, I’ve provided context about myself (I’m a beginner), asked for the simplest possible explanation, and requested examples.

This change completely shifted how the model approached the topic.

Context is often the most important element of a prompt. Background information helps the model understand what you are really asking.

As seen in the example above, providing context about my skill level totally changed the way the question was answered.
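Context does not have to live inside the question itself. A minimal sketch, assuming the role/content message format used by chat-style APIs such as OpenAI's Chat Completions, is to send the background as a separate system message. The `build_messages` helper is hypothetical and no API is called; placing the context inline, as the revised prompt does, works too.

```python
def build_messages(question: str, context: str = "") -> list[dict[str, str]]:
    """Hypothetical helper: package a question, plus optional context, as chat messages."""
    messages = []
    if context:
        # Background about the user goes first, as a system message.
        messages.append({"role": "system", "content": context})
    messages.append({"role": "user", "content": question})
    return messages


initial = build_messages("What is prompt engineering?")
revised = build_messages(
    "Could you explain prompt engineering to me in the simplest way possible, using examples?",
    context="The user is a beginner to language models.",
)
for message in revised:
    print(f"{message['role']}: {message['content']}")
```

Keeping context in its own message makes it easy to reuse the same background across many questions.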

Types of Prompting

The type of prompt you use can significantly influence the outcome. Here are some common prompting techniques:

- Zero-Shot Prompting:

This is the simplest form of prompting: the user instructs the model to perform a task without providing any examples.

Zero-shot is more flexible because it requires no additional data preparation.

Use zero-shot for quick tasks where a perfect answer isn’t crucial.

- Few-Shot Prompting:

In this technique, the user provides the model with a few examples of the task to be performed.

It requires additional data preparation.

The examples can be tailored to a specific task or output format, steering the model without retraining it.

- Chain of Thought (CoT) Prompting:

This technique encourages the model to show its reasoning process by working through intermediate steps before giving its response.

It helps the model provide a more detailed and reasoned answer.

CoT prompting is like asking a student to show their work and explain each step they took to arrive at the answer.
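The three techniques above can be sketched as plain prompt-building functions. The function names and the "Text:/Answer:" layout are hypothetical. The CoT helper uses the zero-shot variant of chain of thought (a "think step by step" trigger phrase); the alternative is to include worked examples whose answers spell out their reasoning.

```python
def zero_shot(task: str, text: str) -> str:
    # Just the instruction and the input, with no examples.
    return f"{task}\n\nText: {text}\nAnswer:"


def few_shot(task: str, examples: list[tuple[str, str]], text: str) -> str:
    # Prepend worked input/answer pairs so the model can infer the pattern.
    shots = "\n\n".join(f"Text: {x}\nAnswer: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nText: {text}\nAnswer:"


def chain_of_thought(question: str) -> str:
    # Ask the model to lay out intermediate steps before the final answer.
    return f"{question}\nLet's think step by step, then give the final answer on its own line."


task = "Classify the sentiment as positive or negative."
examples = [("I love it.", "positive"), ("Total waste of money.", "negative")]
print(zero_shot(task, "Works as advertised."))
print(few_shot(task, examples, "Works as advertised."))
print(chain_of_thought("A shirt costs $20 after a 20% discount. What was the original price?"))
```

The few-shot prompt ends with the same "Answer:" cue as the examples, which nudges the model to reply in the same short format.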

Use Cases:

- Classification:

Assigning text, images, or video to predefined labels. The LLM first interprets the input, matches it to the available categories, and assigns a label.

- Summarization:

Creating a shorter version of a text document that captures the main ideas and essential information.
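Both use cases come down to a well-scoped prompt. In the sketch below, the support-ticket label set, the helper names, and the fallback label are hypothetical. The `parse_label` step reflects a common precaution: models sometimes add punctuation or extra words, so the reply is normalized and checked against the allowed labels before use.

```python
LABELS = ("billing", "technical", "other")  # hypothetical label set


def classification_prompt(text: str, labels: tuple[str, ...] = LABELS) -> str:
    # List the allowed labels and ask for the label only.
    options = ", ".join(labels)
    return (
        f"Assign the support ticket below to exactly one of these labels: {options}.\n"
        f"Reply with the label only.\n\nTicket: {text}"
    )


def parse_label(reply: str, labels: tuple[str, ...] = LABELS, default: str = "other") -> str:
    # Normalize the reply; fall back to `default` if it is not a known label.
    cleaned = reply.strip().strip(".").lower()
    return cleaned if cleaned in labels else default


def summarization_prompt(text: str, max_sentences: int = 2) -> str:
    # State the length limit explicitly and ask for the main ideas only.
    return (
        f"Summarize the text below in at most {max_sentences} sentences, "
        f"keeping only the main ideas.\n\nText: {text}"
    )


print(classification_prompt("I was charged twice this month."))
print(parse_label(" Billing.\n"))
print(summarization_prompt("Asset allocation spreads investments across asset classes.", 1))
```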

Conclusion:

By crafting well-defined prompts, you can effectively communicate tasks and achieve desired outcomes.

Experiment with different prompting techniques and iterate to find the most suitable approach for your needs.

If you’re interested in delving deeper into the world of prompt engineering, you might want to explore the website promptingguide.ai.
